Chat with Open-Source LLMs Locally (Llama, DeepSeek, Mistral)

Large Language Models (LLMs) are everywhere — and everyone’s using them. But there’s a big issue emerging: people are feeding sensitive emails and company data into cloud-based LLM services like ChatGPT, DeepSeek, and others. Just to draft a few emails or generate some paragraphs, confidential information is getting handed over to third-party servers.

Sound a little risky? It is.

Thankfully, there’s a safer alternative: open-source LLMs. Hugging Face and Ollama offer a wide range of models that you can run directly on your own machine — keeping all your data private.

However, running open-source LLMs locally can feel overwhelming. You usually need to download large model files (weights), tweak generation parameters like max_length, top_p, and temperature, and sometimes even write Python scripts, set up REST APIs, or clone GitHub repos just to get started.

So how do you make it simple?

Enter Langformers. 🚀

Chat with Open-Source LLMs in Minutes

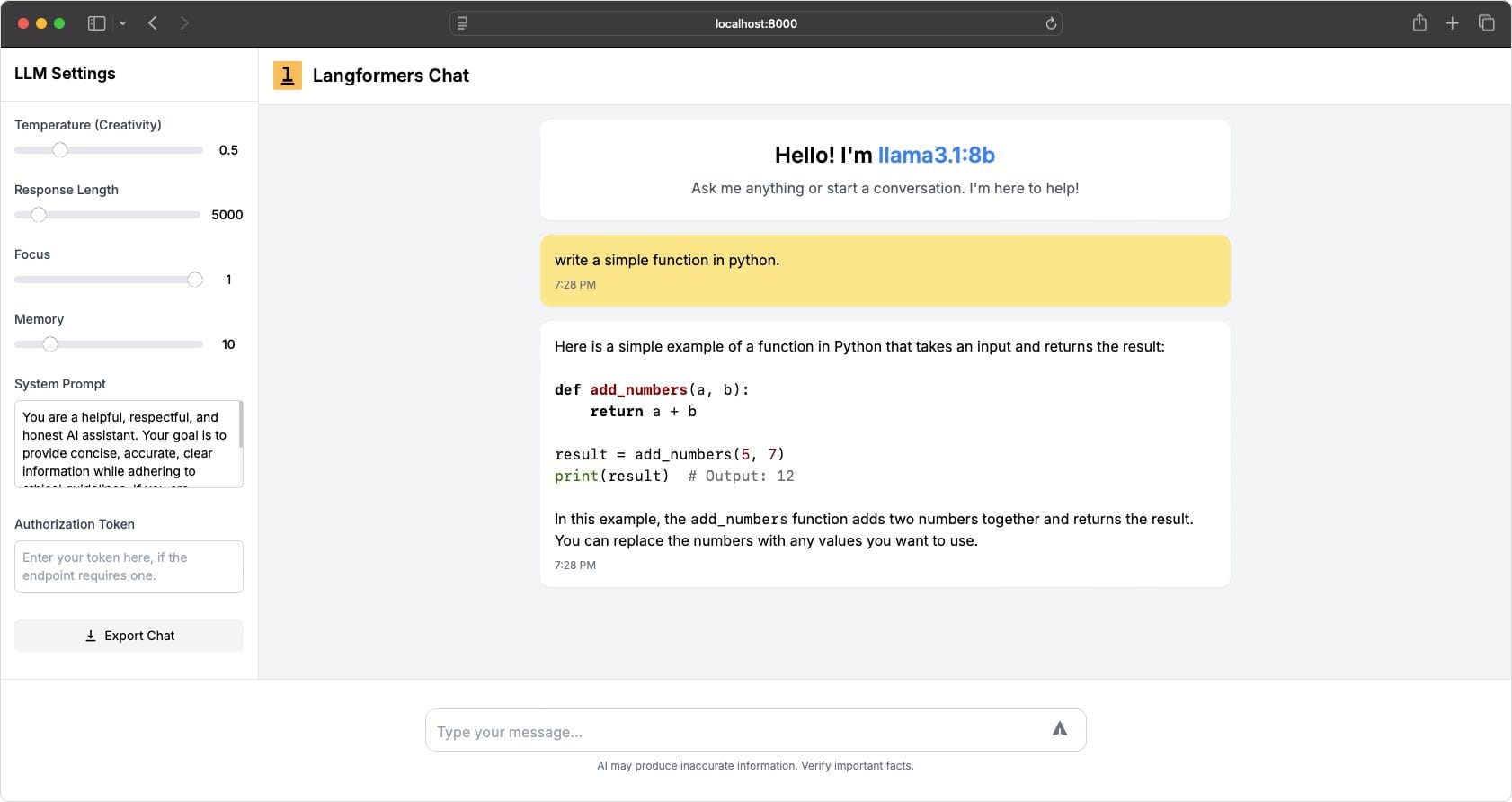

Langformers makes chatting with Hugging Face and Ollama models ridiculously easy: it takes just three lines of Python code. It provides a beautiful, ChatGPT-style web interface where you can also adjust generation settings (like temperature and top-p) directly from the UI.

You’ll feel right at home if you've used ChatGPT, but this time, everything stays on your machine.
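If temperature and top-p feel abstract, the sketch below shows how these two knobs are commonly applied when a model picks its next token. It is a generic illustration, not Langformers' or Ollama's internal code; `sample_next_token`, the toy vocabulary, and the logits are all made up for this example.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_p=1.0, rng=random):
    """Pick one token index from raw logits using temperature and top-p (nucleus) sampling."""
    if temperature <= 0:
        # Temperature 0 is conventionally treated as greedy decoding (argmax).
        return max(range(len(logits)), key=lambda i: logits[i])

    # 1. Temperature: divide logits before softmax. <1 sharpens, >1 flattens.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # 2. Top-p: keep the smallest set of most-likely tokens whose
    #    cumulative probability reaches top_p; drop the rest.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # 3. Draw one kept token in proportion to its probability
    #    (random.choices accepts relative weights, so no renormalising is needed).
    weights = [probs[i] for i in kept]
    return rng.choices(kept, weights=weights, k=1)[0]


# Hypothetical toy example: four candidate tokens and their raw scores.
vocab = ["cat", "dog", "fish", "emu"]
logits = [2.0, 1.5, 0.2, -1.0]
rng = random.Random(0)
print([vocab[sample_next_token(logits, 0.7, 0.9, rng)] for _ in range(5)])
```

Lower temperature and lower top-p both push output toward the most likely tokens (more predictable); raising them allows more variety. The third parameter mentioned earlier, max_length, is simpler: it caps how many tokens a single generation may produce.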

Getting Started with Langformers

First, a few quick notes:

- Hugging Face models: Langformers supports chat-tuned models (those with a `chat_template` in their `tokenizer_config.json`) that are compatible with the Transformers library and your hardware.
  - Example: `meta-llama/Llama-3.2-1B-Instruct` (make sure you have access to it via Hugging Face).
- Ollama models: Ensure you have Ollama installed and the model pulled.
  - Install Ollama: Download Ollama
  - Pull a model (example): `ollama pull llama3.1:8b`
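If you're not sure whether a Hugging Face model is chat-tuned, you can check for the `chat_template` key yourself. Here is a minimal sketch using only the standard library; `has_chat_template` is a hypothetical helper (not part of Langformers), and it assumes the model's `tokenizer_config.json` is already on disk.

```python
import json
from pathlib import Path


def has_chat_template(config_path):
    """Return True if a tokenizer_config.json defines a non-empty chat_template."""
    config = json.loads(Path(config_path).read_text(encoding="utf-8"))
    template = config.get("chat_template")
    # Some models store a single Jinja string; others store a list of
    # named templates. Treat any non-empty value as "chat-tuned".
    return bool(template)
```

For example, `huggingface_hub.hf_hub_download("meta-llama/Llama-3.2-1B-Instruct", "tokenizer_config.json")` returns a local path you can pass in (gated repos require a logged-in account that has been granted access). Recent Transformers releases may also save the template as a separate `chat_template.jinja` file, so a `False` here is a hint rather than proof.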

Install Langformers

Install Langformers using pip:

```bash
pip install -U langformers
```

Best practice: create and activate a virtual environment first, rather than installing Python packages globally. Check out the official Langformers installation guide if you need help setting that up.
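A virtual environment is typically created with `python -m venv .venv` and then activated. If you want to double-check that you're inside one before running `pip install`, this small sketch compares two standard `sys` attributes; `in_virtualenv` is a hypothetical helper name, and the check covers venv/virtualenv (conda environments are detected differently).

```python
import sys


def in_virtualenv():
    """True when running inside a venv/virtualenv rather than the base interpreter."""
    # venv sets sys.prefix to the environment directory, while
    # sys.base_prefix keeps pointing at the base Python installation.
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


if in_virtualenv():
    print(f"Virtual environment active at {sys.prefix}")
else:
    print("Warning: no virtual environment detected; "
          "pip may install into your system or user site-packages.")
```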

Running Your First Local Chatbot

Create a Python file with the following code:

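```python
# Import langformers
from langformers import tasks

# Create a generator backed by the Ollama model pulled earlier
generator = tasks.create_generator(provider="ollama", model_name="llama3.1:8b")

# Run the generator (serves the chat UI on all network interfaces, port 8000)
generator.run(host="0.0.0.0", port=8000)
```

Now, open your browser and navigate to http://localhost:8000. (Binding to `0.0.0.0` makes the server listen on all network interfaces, including localhost.)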

You’ll see a full-featured chat interface where you can start chatting with your LLM — fully locally!

Accessing the Chat Interface on Other Devices

Since the server binds to 0.0.0.0 (all network interfaces), any device on the same local network (like your iPad, mobile phone, or another laptop) can open the chat interface at `http://YOUR_MACHINE_IP:8000`.
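Your machine's IP address is shown in your OS network settings. Alternatively, this minimal sketch asks the operating system which local interface it would use for outbound traffic; `lan_ip` is a hypothetical helper, and `8.8.8.8` is only used as a routing target (a UDP `connect` sends no packets).

```python
import socket


def lan_ip(fallback="127.0.0.1"):
    """Best-effort guess at this machine's LAN IP address."""
    try:
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
            # Connecting a UDP socket only asks the OS to pick a route;
            # nothing is actually sent over the network.
            s.connect(("8.8.8.8", 80))
            return s.getsockname()[0]
    except OSError:
        # No route (e.g. offline) or sockets unavailable: fall back to loopback.
        return fallback


PORT = 8000  # must match the port passed to generator.run()
print(f"Open http://{lan_ip()}:{PORT} on another device on the same network")
```

On machines with several interfaces (VPN, Docker bridges) the guess may not be the Wi-Fi address, and a local firewall may need to allow incoming connections on the port.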

Good news: Langformers' chat UI is optimized for all screen sizes — it looks and works beautifully even on smaller screens like an iPhone 14 Pro.

Securing Your Chat Interface (Optional)

By default, everyone on your network can access the chat interface. Cool for some scenarios, but if you want to restrict access, Langformers lets you plug in your own authentication logic.

Here’s how you can enable it:

```python
from langformers import tasks


async def auth_dependency():
    """Authorization dependency for request validation.

    - Implement your own logic here (e.g., API key check, authentication).
    - If the function returns a value, access is granted.
    - Raising an HTTPException will block access.
    """
    return True  # Modify this logic as needed


# Pass the dependency when creating the generator, then start the server
generator = tasks.create_generator(provider="ollama", model_name="llama3.1:8b", dependency=auth_dependency)
generator.run(host="0.0.0.0", port=8000)
```

The official Langformers documentation has a simple working example for setting up authentication.

Bonus: Use Langformers as a REST API

Besides the web chat UI, Langformers also exposes a REST endpoint — perfect if you want to build your own custom app using your local LLM.

You can find more about this here: Langformers LLM Inference API.
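As a rough idea of what calling that endpoint from Python might look like, here is a standard-library sketch. The `/api/generate` path and the JSON field names are assumptions, not confirmed by this article; check them against the Langformers LLM Inference API page before relying on this. `build_generate_request` and `generate` are hypothetical helpers.

```python
import json
import urllib.request

# Assumed endpoint and payload fields; verify them against the
# Langformers LLM Inference API documentation.
BASE_URL = "http://localhost:8000"
GENERATE_PATH = "/api/generate"


def build_generate_request(prompt, temperature=0.5, top_p=1.0, max_length=512,
                           system_prompt="You are a helpful assistant.",
                           base_url=BASE_URL):
    """Build (but do not send) a JSON POST request for the local inference endpoint."""
    payload = {
        "system_prompt": system_prompt,
        "prompt": prompt,
        "temperature": temperature,
        "top_p": top_p,
        "max_length": max_length,
    }
    return urllib.request.Request(
        base_url + GENERATE_PATH,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, **kwargs):
    """Send the request and return the raw response body as text."""
    req = build_generate_request(prompt, **kwargs)
    with urllib.request.urlopen(req, timeout=120) as resp:
        return resp.read().decode("utf-8")
```

With the server from earlier running, `generate("Draft a short thank-you email")` returns whatever the endpoint sends back. If the API streams its output, reading the whole body simply waits for the stream to finish. If you enabled an authentication dependency, add the matching header in `build_generate_request`.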